The purpose of this lab exercise was to learn how to perform key photogrammetric tasks on aerial photographs and satellite imagery. It covered the mathematics behind calculating photographic scale, measuring areas and perimeters, and calculating relief displacement in images. The latter portion of the lab focused on orthorectifying satellite images. These processes are highly relevant in the field of remote sensing. As the instructor, Professor Cyril Wilson, explained, the skills learned in this lab apply directly to jobs working with remote sensing technologies. This lab was much more technical than previous labs and therefore required more time and precision.

Methods:

Part 1:

This first section of the lab covered photographic scale, measuring distances and objects in an image, and calculating relief displacement.

The first task in this section was to calculate the scale of an aerial photograph of the city of Eau Claire (Figure 1). The real-world distance from point A to point B had been measured as 8822.49'. The same distance measured on the photograph was 2.65". The scale is the ratio of these two distances, so both were first converted to the same unit: 8822.49' = 105,869.88". Every 2.65" on the photograph therefore covers 105,869.88" on the ground. Dividing both numbers by 2.65 gives a scale of approximately 1:39,950.
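The scale arithmetic above can be sketched in Python. This is a minimal illustration, not the lab's procedure. The function name `scale_from_distances` is hypothetical. The only inputs taken from the lab are the ground distance (8822.49') and the photo distance (2.65").

```python
INCHES_PER_FOOT = 12


def scale_from_distances(ground_feet: float, photo_inches: float) -> float:
    """Return the scale denominator x of a photo scale written as 1:x.

    The ground distance is converted to inches so that both measurements
    share a unit. The scale is then the ratio ground / photo.
    """
    ground_inches = ground_feet * INCHES_PER_FOOT
    return ground_inches / photo_inches


denominator = scale_from_distances(8822.49, 2.65)
print(f"1:{denominator:,.0f}")  # prints 1:39,951
```

The exact quotient is about 39,951, which the text rounds to 1:39,950.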

The scale of a similar aerial photograph of Eau Claire, WI was then calculated from the focal length of the camera lens, the altitude of the aircraft, and the elevation of Eau Claire County. The focal length was given as 152 mm (0.152 m), the aircraft altitude as 20,000 ft (6096 m), and the elevation of Eau Claire County as 796 ft (242.6 m). First, the terrain elevation was subtracted from the aircraft altitude to get the flying height above the ground. The focal length was then divided by this value: 0.152 m / (6096 m - 242.6 m) = 0.152 m / 5853.4 m, which gives a scale of approximately 1:38,509.
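This calculation uses the standard relationship scale = f / (H - h), where f is the focal length, H is the aircraft altitude, and h is the terrain elevation. It can be sketched as follows. The function name is hypothetical. The inputs are the values given in the lab, converted with 1 ft = 0.3048 m.

```python
FEET_TO_METRES = 0.3048


def scale_from_focal_length(focal_m: float, altitude_m: float, terrain_m: float) -> float:
    """Return the scale denominator x (scale 1:x) from S = f / (H - h).

    All three arguments must be in the same unit (metres here).
    """
    flying_height = altitude_m - terrain_m  # height of the lens above the ground
    return flying_height / focal_m


focal = 152 / 1000                    # 152 mm lens -> 0.152 m
altitude = 20_000 * FEET_TO_METRES    # 20,000 ft -> 6096 m
terrain = 796 * FEET_TO_METRES        # 796 ft -> about 242.6 m
denominator = scale_from_focal_length(focal, altitude, terrain)
print(f"1:{denominator:,.0f}")        # prints 1:38,509
```

With the values stated in the lab, the result works out to about 1:38,509.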

Next, relief displacement was calculated for an object in a zoomed-in portion of an aerial photograph of an area near the University of Wisconsin-Eau Claire campus (Figure 3). This required four values:

- the scale of the aerial photograph (1:3,209)
- the height of the camera above the datum (47,760")
- the distance on the image from the principal point, the point on which the photograph is centered, to the object (10.5")
- the real-world height of object A, a smokestack (1123.15"), found by measuring the smokestack on the image and applying the image scale

The height of the smokestack was multiplied by its distance from the principal point on the image and divided by the height of the camera above the datum. This gave a relief displacement of approximately 0.247". In other words, the top of the smokestack appears displaced about 0.247" radially outward from the principal point relative to its base.
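The relief displacement formula used here is d = h × r / H. In it, h is the object's height, r is its radial distance from the principal point on the image, and H is the camera height above the datum, all in the same unit. A minimal sketch follows. The function names are hypothetical. The 0.35" image measurement of the smokestack is back-computed from the reported height (1123.15" / 3,209 = 0.35") rather than taken from the lab.

```python
def object_height(image_length_in: float, scale_denominator: float) -> float:
    """Real-world height from a length measured on the photo and its scale."""
    return image_length_in * scale_denominator


def relief_displacement(height: float, radial_distance: float, flying_height: float) -> float:
    """d = h * r / H, with every length in the same unit (inches here)."""
    return height * radial_distance / flying_height


# 0.35 in is an assumption back-computed from the reported 1123.15 in height
h = object_height(0.35, 3209)
d = relief_displacement(h, 10.5, 47_760)
print(f"h = {h:.2f} in, d = {d:.3f} in")  # prints: h = 1123.15 in, d = 0.247 in
```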

Part 2:

This portion of the lab involved creating a stereoscopic image of the city of Eau Claire from an orthorectified image and a DEM (Figure 4). The image and the DEM were input into the Anaglyph Generation tool in ERDAS, which output a stereoscopic anaglyph image (Figure 5). The anaglyph can be viewed in 3D with red/cyan anaglyph glasses.
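The lab does not describe how the Anaglyph Generation tool works internally, so the following is only a simplified illustration of the general idea. The DEM is used to shift pixels horizontally, with a parallax proportional to elevation, to create a second view. The two views are then combined into the red and cyan (green + blue) channels of one image. The function name and the `exaggeration` factor are hypothetical. Images are plain nested lists to keep the sketch dependency-free.

```python
def make_anaglyph(image, dem, exaggeration=0.01):
    """Build an RGB red/cyan anaglyph from a grayscale image and a DEM.

    image, dem: equal-sized 2-D lists (rows of numbers).
    The left-eye view is the image itself. The right-eye view samples each
    pixel from a column shifted by a parallax proportional to elevation.
    """
    rows, cols = len(image), len(image[0])
    anaglyph = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            # horizontal parallax (in pixels) grows with terrain elevation
            shift = round(dem[r][c] * exaggeration)
            # the right-eye view samples a shifted column, clamped to the edge
            src = min(max(c + shift, 0), cols - 1)
            left, right = image[r][c], image[r][src]
            # red channel from the left view, green and blue (cyan) from the right
            out_row.append((left, right, right))
        anaglyph.append(out_row)
    return anaglyph


print(make_anaglyph([[10, 20, 30, 40]], [[0, 100, 0, 200]]))
```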

The left image is the orthorectified image of Eau Claire, WI, and the right image is a DEM of the same area. These two images were input into the Anaglyph Generation tool to create Figure 5. (Figure 4)

Part 3:

This large portion of the lab involved orthorectifying images using the ERDAS Imagine Leica Photogrammetry Suite (LPS) (Figure 6).

This is the LPS window after the image to be orthorectified has been added. The image is not displayed yet because Ground Control Points (GCPs) still need to be collected. (Figure 6)

Two SPOT satellite images of Palm Springs, California needed to be orthorectified. The LPS Project Manager was opened, the project settings were configured, and the imagery to be orthorectified was added. The Classic Point Measurement Tool (Figure 7) was then opened to collect GCPs on the first image of Palm Springs, and the reference image was brought in (Figure 8). The reference image in this case was an image of Palm Springs that had already been orthorectified.
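Collected GCPs are used to constrain a transformation from image coordinates to ground coordinates. The sketch below illustrates this by fitting a first-order polynomial (affine) transformation with least squares. It then reports the root-mean-square error (RMSE) of the fit. This is a deliberately simplified stand-in, not the sensor model configured in LPS for the SPOT images. All function names and the sample points are hypothetical.

```python
import math


def solve3(m, v):
    """Solve the 3x3 linear system m @ x = v by Gaussian elimination."""
    a = [list(row) + [rhs] for row, rhs in zip(m, v)]
    for i in range(3):
        # partial pivoting: move the largest remaining entry into row i
        pivot = max(range(i, 3), key=lambda k: abs(a[k][i]))
        a[i], a[pivot] = a[pivot], a[i]
        for k in range(i + 1, 3):
            f = a[k][i] / a[i][i]
            for j in range(i, 4):
                a[k][j] -= f * a[i][j]
    x = [0.0] * 3
    for i in range(2, -1, -1):  # back substitution
        x[i] = (a[i][3] - sum(a[i][j] * x[j] for j in range(i + 1, 3))) / a[i][i]
    return x


def fit_affine(image_pts, ground_pts):
    """Least-squares fit of X = a0 + a1*x + a2*y and Y = b0 + b1*x + b2*y."""
    rows = [(1.0, x, y) for x, y in image_pts]
    # normal matrix A^T A, shared by the X and Y fits
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for axis in (0, 1):  # 0 = ground X, 1 = ground Y
        atb = [sum(r[i] * g[axis] for r, g in zip(rows, ground_pts)) for i in range(3)]
        coeffs.append(solve3(ata, atb))
    return coeffs


def rmse(coeffs, image_pts, ground_pts):
    """Root-mean-square distance between predicted and reference GCPs."""
    total = 0.0
    for (x, y), (gx, gy) in zip(image_pts, ground_pts):
        px = coeffs[0][0] + coeffs[0][1] * x + coeffs[0][2] * y
        py = coeffs[1][0] + coeffs[1][1] * x + coeffs[1][2] * y
        total += (px - gx) ** 2 + (py - gy) ** 2
    return math.sqrt(total / len(image_pts))


# Hypothetical GCPs: image (col, row) -> ground (X, Y) on an exact affine grid
image_pts = [(0, 0), (100, 0), (0, 100), (100, 100), (50, 25)]
ground_pts = [(1000 + 2 * x, 5000 - 2 * y) for x, y in image_pts]
coeffs = fit_affine(image_pts, ground_pts)
print(coeffs, rmse(coeffs, image_pts, ground_pts))
```

Because the synthetic points follow an exact affine relationship, the fit recovers it and the RMSE is essentially zero. With real GCPs, a larger RMSE signals points that disagree with the model.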

The Classic Point Measurement Tool is used to collect GCPs and generate tie points for orthorectification. Here, the input image that needs to be orthorectified can be seen. (Figure 7)

A total of nine GCPs were collected, each placed at matching locations on the input image and the reference image. A second, separate reference image was then added to improve accuracy, and two final GCPs were collected from it. The Type and Usage of each GCP were then set to Full and Control, respectively, to designate the points properly. Finally, a DEM was brought in to supply a z (elevation) value for each GCP.
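Supplying a z value from a DEM amounts to looking up the elevation at each GCP's ground coordinates. A minimal sketch using bilinear interpolation between the four surrounding DEM cells is shown below. The grid layout (row 0 at the north edge, origin at the centre of the top-left cell) and the function name are assumptions. The lab does not describe the interpolation method LPS itself uses.

```python
def dem_elevation(dem, origin_x, origin_y, cell_size, x, y):
    """Bilinearly interpolate the elevation at ground point (x, y).

    dem: 2-D list of elevations, row 0 at the top (north).
    origin_x, origin_y: ground coordinates of the top-left cell centre.
    cell_size: spacing between cell centres, in ground units.
    """
    col = (x - origin_x) / cell_size
    row = (origin_y - y) / cell_size  # rows increase southward
    if not (0 <= row <= len(dem) - 1 and 0 <= col <= len(dem[0]) - 1):
        raise ValueError("point lies outside the DEM")
    c0, r0 = int(col), int(row)
    c1, r1 = min(c0 + 1, len(dem[0]) - 1), min(r0 + 1, len(dem) - 1)
    fx, fy = col - c0, row - r0  # fractional position inside the cell
    top = dem[r0][c0] * (1 - fx) + dem[r0][c1] * fx
    bottom = dem[r1][c0] * (1 - fx) + dem[r1][c1] * fx
    return top * (1 - fy) + bottom * fy


dem = [[100, 200], [300, 400]]  # hypothetical 2x2 DEM with 10-unit cells
print(dem_elevation(dem, 0, 10, 10, 5, 5))
```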

Some of the control points are listed here. The x and y reference coordinates were set using the two orthorectified reference images, while the z reference was set using a DEM. (Figure 9)

The second image to be orthorectified was then brought in, and GCPs were created on it to help orthorectify both images. These GCPs were placed at the same locations as the GCPs already collected on the first image (Figure 10). From here, tie points could be generated.
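The lab does not say how the tie points were found. One common approach to generating them automatically is area-based matching. A small window around a point in one image is compared with windows in the overlapping image, and the position with the highest normalized cross-correlation (NCC) is taken as the match. The simplified sketch below uses hypothetical function names and represents images as nested lists. It is not a description of LPS's own algorithm.

```python
import math


def ncc(a, b):
    """Normalized cross-correlation of two equal-length flattened patches."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    num = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    den = math.sqrt(sum((p - ma) ** 2 for p in a) * sum((q - mb) ** 2 for q in b))
    return num / den if den else 0.0  # flat patches carry no match information


def patch(img, r, c, half):
    """Flatten the (2*half+1)-pixel square window centred on (r, c)."""
    return [img[i][j] for i in range(r - half, r + half + 1)
            for j in range(c - half, c + half + 1)]


def match_point(left, right, r, c, half=1, search=2):
    """Find the pixel in `right` whose window best matches `left` at (r, c).

    Searches a (2*search+1)-pixel square neighbourhood around (r, c).
    Returns ((row, col), score), where a score of 1.0 is a perfect match.
    """
    template = patch(left, r, c, half)
    best, best_score = None, -2.0
    rows, cols = len(right), len(right[0])
    for dr in range(-search, search + 1):
        for dc in range(-search, search + 1):
            rr, cc = r + dr, c + dc
            # only consider windows that lie fully inside the second image
            if half <= rr < rows - half and half <= cc < cols - half:
                score = ncc(template, patch(right, rr, cc, half))
                if score > best_score:
                    best, best_score = (rr, cc), score
    return best, best_score


left = [[0] * 7 for _ in range(7)]
right = [[0] * 7 for _ in range(7)]
left[3][3] = 10   # a feature in the first image
right[3][4] = 10  # the same feature, one column to the right in the second image
print(match_point(left, right, 3, 3))  # best match at (3, 4), score close to 1.0
```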

The triangles represent GCPs shared between the two images to be orthorectified. The GCPs in the area where the two images overlap link them together so that one output image can be created. (Figure 10)

The Triangulation Tool created tie points between the two images, which can be seen in Figure 12. (Figure 11)

Discussion:

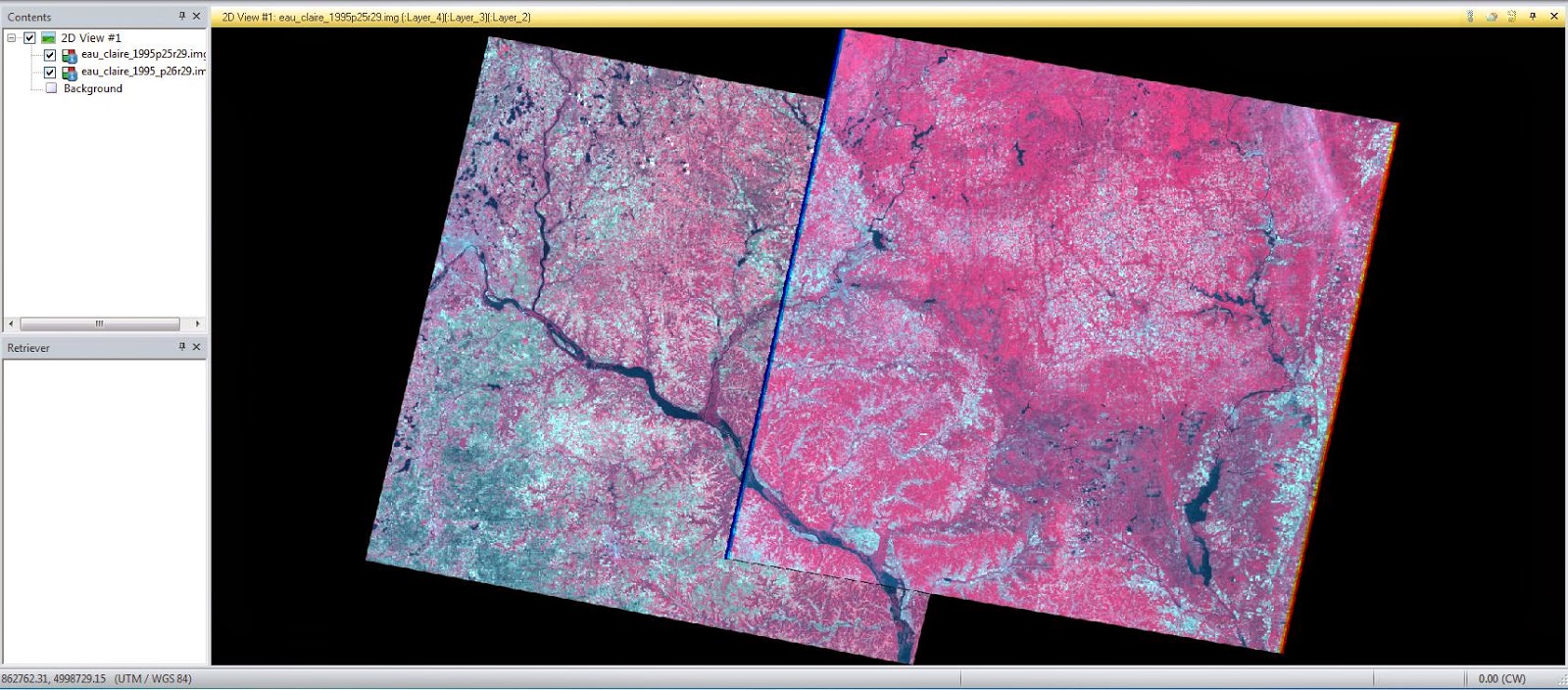

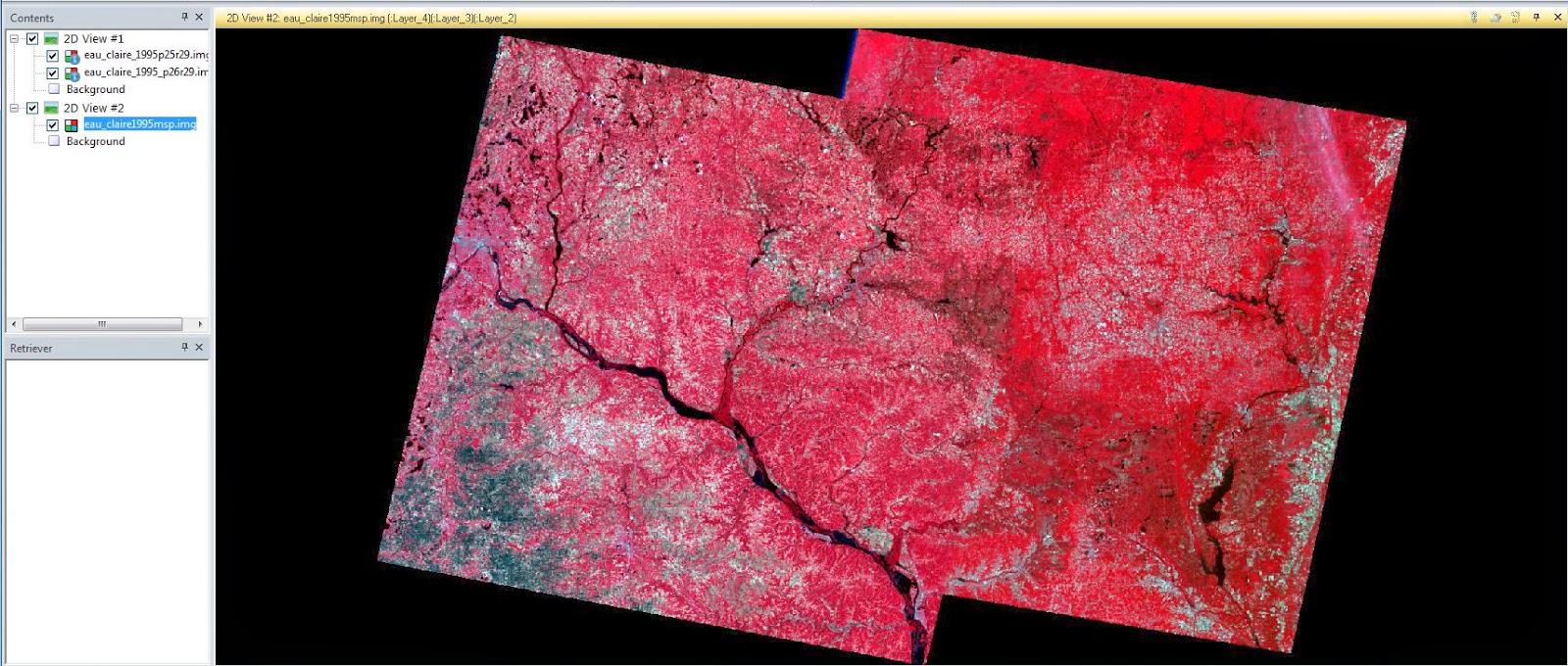

The process of creating the final orthorectified image was painstaking, requiring a large number of GCPs and tie points and a high degree of accuracy. However, this effort paid off: the two input images fused together almost seamlessly (Figure 14).

Conclusion:

The skills covered in this lab are highly technical, but they are also powerful and widely applicable. Relatively few people can perform these tasks, so proficiency in orthorectification and the other photogrammetric techniques practiced here can help in obtaining a job in remote sensing. The lab taught these skills well, and any member of the class who completed it should be able to repeat them in the future.